Look over there!

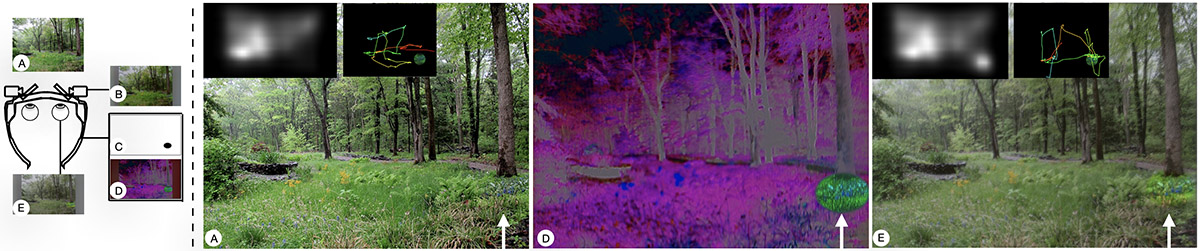

Overview of real-world guidance using saliency modulation in Augmented Reality glasses. (Left) The main steps of our approach, which modulates the real world (A) by capturing the scene with an eye-aligned scene camera (B). Applying a mask (C) and displaying a real-time saliency modulation image in the AR glasses (D) changes the perceived scene (E). (Right) (A) Original (unmodulated) scene, with insets showing its saliency and an example gaze path of a study participant. (D) Overlay displayed in the optical see-through AR glasses. (E) Resulting scene as seen through the AR glasses with saliency modulation applied; the insets show its saliency and an example gaze path of a study participant. The white arrow pointing to the emphasised area is for illustration only.

Abstract: Augmented Reality has traditionally been used to display digital overlays in real environments. Many AR applications, such as remote collaboration, picking tasks, or navigation, require highlighting physical objects for selection or guidance. These highlights use graphical cues such as outlines and arrows. Whilst effective, such cues add considerable visual clutter, may occlude scene elements, and can be problematic for long-term use. As an alternative to these overlays, we explore saliency modulation to accentuate objects in the real environment and guide the user’s gaze. Rather than manipulating video streams, as is done in perception and cognition research, we investigate saliency modulation of the real world using optical see-through head-mounted displays. This is a new challenge, since we do not have full control over the user’s view of the real environment. In this work we present our solution to this challenge, including the prototypes we built and their evaluation.
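One reason the optical see-through case is harder than video manipulation is that such a display can only add light to what the user already sees; it cannot darken the real scene. The sketch below illustrates that constraint with a deliberately simplified, brightening-only overlay. It is not the authors' saliency modulation method: the function names `modulation_overlay` and `perceived`, the `gain` parameter, the grayscale luminance range [0, 1], and the interpretation of the mask as "region to emphasise" are all hypothetical assumptions for illustration.

```python
from typing import List

# Hypothetical representation: grayscale luminance values in [0, 1], row-major.
Image = List[List[float]]


def modulation_overlay(scene: Image, mask: Image, gain: float = 0.3) -> Image:
    """Hypothetical additive overlay for an optical see-through display.

    The display can only add light, so every overlay value is non-negative.
    Pixels where the mask is 1 are brightened by up to `gain`; pixels where
    the mask is 0 receive no added light. Each value is clamped so that
    scene + overlay never exceeds 1.0.
    """
    overlay: Image = []
    for scene_row, mask_row in zip(scene, mask):
        out_row = []
        for s, m in zip(scene_row, mask_row):
            boost = gain * m          # desired brightening for this pixel
            headroom = 1.0 - s        # room left before the pixel saturates
            out_row.append(max(0.0, min(boost, headroom)))
        overlay.append(out_row)
    return overlay


def perceived(scene: Image, overlay: Image) -> Image:
    """Simplified model of the view: real light plus display light.

    This ignores attenuation by the optical combiner and any misalignment
    between the scene camera and the eye.
    """
    return [[s + o for s, o in zip(srow, orow)] for srow, orow in zip(scene, overlay)]


if __name__ == "__main__":
    scene = [[0.2, 0.9], [0.5, 0.5]]
    mask = [[1.0, 1.0], [0.0, 0.0]]   # emphasise the top row only
    ov = modulation_overlay(scene, mask)
    print(ov)
    print(perceived(scene, ov))
```

In this toy example, the already bright pixel (0.9) can only gain about 0.1 before saturating, which hints at why purely additive cues have limited effect on bright real-world regions.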

Acknowledgements: This work is supported by the Marsden Fund Council from Government funding (grant no. MFP-UOO1834).
