Immersive Mobile Telepresence:

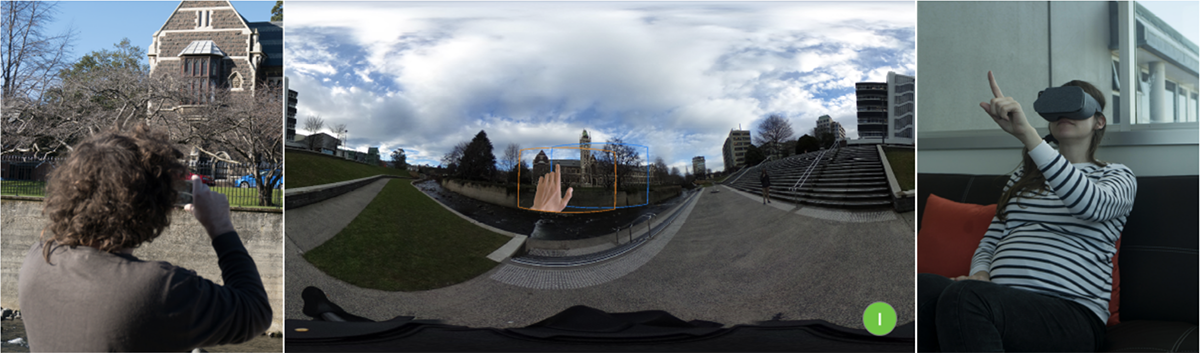

Our approach for immersive mobile telepresence: (Left) Using their mobile phone, the local user creates a spherical mapping of their environment using one of several proposed methods. (Right) A remote user views the shared environment through their own mobile phone (with an optional head-mounted display), with a viewpoint that is independent of the direction of the local user’s camera. The remote user can point to objects of interest in this environment and have these gestures shown to both users with correct spatial context. (Center) The panoramic environment shared by the two users. Coloured outlines indicate the field of view of each user so they know where the other is looking.

Abstract: The mobility and ubiquity of mobile head-mounted displays make them a promising platform for telepresence research, as they allow for spontaneous and remote use cases not possible with stationary hardware. In this work, we present a system that provides immersive telepresence and remote collaboration on mobile and wearable devices. It builds a live spherical panoramic representation of a user's environment that a remote user can view in real time while independently choosing the viewing direction. The remote user can then interact with this environment as if they were actually there through intuitive gesture-based interaction. Each user can obtain independent views within this environment by rotating their device, and their current field of view is shared to allow for simple coordination of viewpoints. We present several different approaches to creating this shared live environment and discuss their implementation details, individual challenges, and performance on modern mobile hardware. In doing so, we provide key insights into the design and implementation of next-generation mobile telepresence systems, guiding future research in this domain. The results of a preliminary user study confirm the ability of our system to induce the desired sense of presence in its users.
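
As an illustration of how a shared field of view could be drawn onto a spherical panorama, the following is a minimal sketch rather than the system's actual implementation. It assumes the panorama is stored as an equirectangular image and that each device reports its orientation as yaw and pitch angles; the storage format, the function names `direction_to_equirect` and `fov_outline`, and all parameters are assumptions made for this example.

```python
import math  # kept for readers who extend the sketch with trigonometric projections


def direction_to_equirect(yaw_deg, pitch_deg, width, height):
    """Map a viewing direction to pixel coordinates in an equirectangular image.

    Assumed convention: yaw 0 is the image centre and grows to the right;
    pitch 0 is the horizon and +90 points straight up.
    """
    # Wrap yaw into [-180, 180) so directions past the seam land on the other side.
    yaw = (yaw_deg + 180.0) % 360.0 - 180.0
    # Clamp pitch to the poles.
    pitch = max(-90.0, min(90.0, pitch_deg))
    u = (yaw + 180.0) / 360.0 * width
    v = (90.0 - pitch) / 180.0 * height
    return u, v


def fov_outline(yaw_deg, pitch_deg, h_fov_deg, v_fov_deg, width, height):
    """Corner points of a user's field of view, approximated as a yaw/pitch rectangle.

    Order: top-left, top-right, bottom-right, bottom-left.
    """
    corners = []
    for dy, dp in [(-1, 1), (1, 1), (1, -1), (-1, -1)]:
        corners.append(
            direction_to_equirect(
                yaw_deg + dy * h_fov_deg / 2.0,
                pitch_deg + dp * v_fov_deg / 2.0,
                width,
                height,
            )
        )
    return corners
```

Treating the field of view as a rectangle in yaw and pitch is a simplification: it distorts near the poles, and an outline that crosses the panorama seam needs to be drawn in two pieces.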

Acknowledgements: The authors wish to thank Oliver Reid for his help in conducting the user study, Katie Tong for her constant support, and the rest of the HCI lab for their valuable input throughout the duration of this research.
